Compressing with level 0 means no compression; the archive is then split into 500 MB slices.

zip -0 -s 500m InstallESD InstallESD.dmg

To merge/unzip them:

zip -FF InstallESD.zip --out InstallESD-full.zip

RFC 4408 3.1.3 says

....

IN TXT "v=spf1 .... first" "second string..."

MUST be treated as equivalent to

IN TXT "v=spf1 .... firstsecond string..."

SPF or TXT records containing multiple strings are useful in

constructing records that would exceed the 255-byte maximum length of

a string within a single TXT or SPF RR record.

So if you are getting the error “TXTRDATATooLong”, a solution is to split the value into multiple strings within the same record. For example, instead of:

"v=DKIM1; k=rsa; g=*; s=email; h=sha1; t=s; p=MIGfMA0GCSqGSIb3DQEBAQUAA4GNADCBiQKBgQDx2zIlneFcE2skbzXjq5GudbHNntCGNN9A2RZGC/trRpTXzT/+oymxCytrEsmrwtvKdbTnkkWOxSEUcwU2cffGeaMxgZpONCu+qf5prxZCTMZcHm9p2CwCgFx3reSF+ZmoaOvvgVL5TKTzYZK7jRktQxPdTvk3/yj71NQqBGatLQIDAQAB;"

you can pick a split point so that each part is no longer than 255 characters and insert [double quote][space][double quote] there.

For example, I tried:

"v=DKIM1; k=rsa; g=*; s=email; h=sha1; t=s; p=MIGfMA0GCSqGSIb3DQEBAQUAA4GNADCBiQKBgQDx2zIlneFcE2skbzXjq5GudbHNntCGNN9A2RZGC/trRpTXzT/+oymxCytrEsmrwtvKdbTnkkWOxSEUcwU2cffGeaMxgZpONCu+qf5prxZCT" "MZcHm9p2CwCgFx3reSF+ZmoaOvvgVL5TKTzYZK7jRktQxPdTvk3/yj71NQqBGatLQIDAQAB;"

and as a result I’ve got:

dig -t TXT long.xxxxxx.yyyy @ns-iiii.awsdns-jj.org.

;; ANSWER SECTION:

long.xxxxxxx.yyyy. 300 IN TXT "v=DKIM1\; k=rsa\; g=*\; s=email\; h=sha1\; t=s\; p=MIGfMA0GCSqGSIb3DQEBAQUAA4GNADCBiQKBgQDx2zIlneFcE2skbzXjq5GudbHNntCGNN9A2RZGC/trRpTXzT/+oymxCytrEsmrwtvKdbTnkkWOxSEUcwU2cffGeaMxgZpONCu+qf5prxZCT" "MZcHm9p2CwCgFx3reSF+ZmoaOvvgVL5TKTzYZK7jRktQxPdTvk3/yj71NQqBGatLQIDAQAB\;"

Note that the returned TXT contains [double quote][space][double quote]; however, the RFC above mandates that such a record be treated the same as the concatenated one.
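The splitting rule can be sketched in Python. This is only an illustration, not part of any DNS tool: the names split_txt_value and join_txt_strings are made up here, and the sketch assumes an ASCII value with no embedded double quotes, so that characters and bytes coincide.

```python
def split_txt_value(value, max_len=255):
    """Return value as space-separated quoted strings of at most max_len characters each."""
    if not 1 <= max_len <= 255:
        raise ValueError("max_len must be between 1 and 255")
    if not value:
        return '""'
    # Cut the value into consecutive chunks, each within the per-string limit.
    chunks = [value[i:i + max_len] for i in range(0, len(value), max_len)]
    return " ".join('"{}"'.format(chunk) for chunk in chunks)


def join_txt_strings(record):
    """Concatenate the quoted strings again, the way RFC 4408 3.1.3 says a reader must."""
    # Assumption: the value itself never contains the sequence quote-space-quote.
    return record.strip()[1:-1].replace('" "', "")


if __name__ == "__main__":
    long_value = "v=DKIM1; k=rsa; p=" + "A" * 300  # placeholder, not a real key
    record = split_txt_value(long_value)
    print(record)
    print(join_txt_strings(record) == long_value)
```

The output of split_txt_value is what you would paste into the record value field; join_txt_strings only exists to show that nothing is lost by the split.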

Note that your example does the same thing, splitting on a 128-character boundary:

dig s2048._domainkey.yahoo.com TXT

;; Truncated, retrying in TCP mode.

; <<>> DiG 9.4.2 <<>> s2048._domainkey.yahoo.com TXT

;; global options: printcmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 61356

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 5, ADDITIONAL: 5

;; QUESTION SECTION:

;s2048._domainkey.yahoo.com. IN TXT

;; ANSWER SECTION:

s2048._domainkey.yahoo.com. 61881 IN TXT "k=rsa\; t=y\; p=MIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEAuoWufgbWw58MczUGbMv176RaxdZGOMkQmn8OOJ/HGoQ6dalSMWiLaj8IMcHC1cubJx2gz" "iAPQHVPtFYayyLA4ayJUSNk10/uqfByiU8qiPCE4JSFrpxflhMIKV4bt+g1uHw7wLzguCf4YAoR6XxUKRsAoHuoF7M+v6bMZ/X1G+viWHkBl4UfgJQ6O8F1ckKKoZ5K" "qUkJH5pDaqbgs+F3PpyiAUQfB6EEzOA1KMPRWJGpzgPtKoukDcQuKUw9GAul7kSIyEcizqrbaUKNLGAmz0elkqRnzIsVpz6jdT1/YV5Ri6YUOQ5sN5bqNzZ8TxoQlkb" "VRy6eKOjUnoSSTmSAhwIDAQAB\; n=A 2048 bit key\;"

Add the following to /etc/elasticsearch/jvm.options.d/custom.options:

-Xms2g

-Xmx2g

According to the official documentation, you should avoid adding custom options directly in jvm.options.

In May 2019 Microsoft made the new and improved App Registration portal generally available. For some time this new portal had been available under the Azure Active Directory > App registration (preview) menu in the Azure Portal. The old App Registration is still available under Azure Active Directory > App registration (legacy), but it will most likely be discontinued soon.

By default, the ID token no longer contains fields such as user principal name (UPN), email, first name and last name, most likely to ensure that personal data is handled with more consideration. As a result, you must manually update the app registration’s manifest and add these optional claims so that ID tokens include them.

"optionalClaims": {

"idToken": [{

"name": "family_name",

"source": null,

"essential": false,

"additionalProperties": []

}, {

"name": "given_name",

"source": null,

"essential": false,

"additionalProperties": []

}, {

"name": "upn",

"source": null,

"essential": false,

"additionalProperties": []

}, {

"name": "email",

"source": null,

"essential": false,

"additionalProperties": []

}

],

"accessToken": [],

"saml2Token": []

},
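If you maintain several app registrations, the fragment above can be generated instead of typed by hand. The following Python sketch only builds the JSON; the helper name build_optional_claims is hypothetical, and pasting the result into the manifest is still a manual step.

```python
import json


def build_optional_claims(id_token_claims):
    """Build an optionalClaims fragment with the same shape as the manifest snippet above."""
    def claim(name):
        return {
            "name": name,
            "source": None,  # serialized as null
            "essential": False,
            "additionalProperties": [],
        }

    return {
        "optionalClaims": {
            "idToken": [claim(name) for name in id_token_claims],
            "accessToken": [],
            "saml2Token": [],
        }
    }


if __name__ == "__main__":
    fragment = build_optional_claims(["family_name", "given_name", "upn", "email"])
    print(json.dumps(fragment, indent=2))
```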

First download the latest firmware from the Ubiquiti download page, then execute the following command on the UniFi Cloud Key via SSH:

ubnt-systool fwupdate https://dl.ubnt.com/unifi/cloudkey/firmware/UCK/UCK.mtk7623.v0.13.10.e171d89.190327.1752.bin

If your brand-new network was set up with the UniFi Network iOS app using an existing Ubiquiti account [email protected] for sync (the option Enable Local Login with UBNT Account is enabled automatically if you log in to your Ubiquiti account during setup), here’s what you will get:

First install growpart:

yum install cloud-utils-growpart

Check current disk info:

fdisk -l

Disk /dev/vda: 42.9 GB, 42949672960 bytes, 83886080 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x0008d73a

Device Boot Start End Blocks Id System

/dev/vda1 * 2048 41943039 20970496 83 Linux

Check disk partition info:

df -h

Filesystem Size Used Avail Use% Mounted on

/dev/vda1 20G 15G 4.5G 77% /

devtmpfs 486M 0 486M 0% /dev

tmpfs 496M 0 496M 0% /dev/shm

tmpfs 496M 460K 496M 1% /run

tmpfs 496M 0 496M 0% /sys/fs/cgroup

tmpfs 100M 0 100M 0% /run/user/0

Run growpart on our device:

growpart /dev/vda 1

CHANGED: partition=1 start=2048 old: size=41940992 end=41943040 new: size=83883999,end=83886047

Resize:

resize2fs /dev/vda1

resize2fs 1.42.9 (28-Dec-2013)

Filesystem at /dev/vda1 is mounted on /; on-line resizing required

old_desc_blocks = 2, new_desc_blocks = 3

The filesystem on /dev/vda1 is now 10485499 blocks long.

Check if disk resized:

df -h

Filesystem Size Used Avail Use% Mounted on

/dev/vda1 40G 15G 24G 38% /

devtmpfs 486M 0 486M 0% /dev

tmpfs 496M 0 496M 0% /dev/shm

tmpfs 496M 460K 496M 1% /run

tmpfs 496M 0 496M 0% /sys/fs/cgroup

tmpfs 100M 0 100M 0% /run/user/0

The base directory is:

/volume1/@appstore/transmission

Most settings/config files and torrents are stored under:

/volume1/@appstore/transmission/var

/volume1/@appstore/transmission/var/torrents

To edit these files, you need sudo permission. For example:

"watch-dir": "/volume2/data_2/torrents-watch",

"watch-dir-enabled": true

First you need to log into your admin account with PowerShell

Set-UnifiedGroup -Identity "GroupDisplayName" -EmailAddresses: @{Add ="[email protected]"}

Set-UnifiedGroup -Identity "GroupDisplayName" -PrimarySmtpAddress "[email protected]"

Set-UnifiedGroup -Identity "GroupDisplayName" -EmailAddresses: @{Remove="[email protected]"}

Edit config file:

vi ~/.config/rtorrent/rtorrent.rc

Change config:

system.file.max_size.set = 3000G

How to force SMB2, which actually doesn’t work for me:

10.14.3 – SMB3 Performance Issues – Force SMB2

Related discussion on Apple Communities

Computers Won’t Shut Down After Connecting To Server

Bug reports on Apple Radar:

How to submit sysdiagnose:

sudo sysdiagnose

Note: The sysdiagnose process can take 10 minutes to complete. Once finished, the folder /private/var/tmp/ should appear automatically in the Finder and the sysdiagnose file there will look similar to this:

sysdiagnose_2017.08.17_07-30-12-0700_10169.tar.gz

View active SMB connection status:

smbutil statshares -a

aws route53 create-reusable-delegation-set --caller-reference ns-example-com

Output:

{

"Location": "https://route53.amazonaws.com/2013-04-01/delegationset/N3PIG1YNLUZGKS",

"DelegationSet": {

"Id": "/delegationset/N3PIG1YNLUZGKS",

"CallerReference": "ns-example-com",

"NameServers": [

"ns-30.awsdns-03.com",

"ns-1037.awsdns-01.org",

"ns-1693.awsdns-19.co.uk",

"ns-673.awsdns-20.net"

]

}

}

Note down the delegation set ID:

/delegationset/N3PIG1YNLUZGKS

Look up the IPv4 and IPv6 addresses of the name servers:

dig +short ns-30.awsdns-03.com

dig +short ns-1037.awsdns-01.org

dig +short ns-1693.awsdns-19.co.uk

dig +short ns-673.awsdns-20.net

dig AAAA +short ns-30.awsdns-03.com

dig AAAA +short ns-1037.awsdns-01.org

dig AAAA +short ns-1693.awsdns-19.co.uk

dig AAAA +short ns-673.awsdns-20.net

Then add these records with your domain registrar and in your current DNS providers. Set TTL to 60s.

aws route53 create-hosted-zone --caller-reference example-tld --name example.tld --delegation-set-id /delegationset/N3PIG1YNLUZGKS

Output:

{

"Location": "https://route53.amazonaws.com/2013-04-01/hostedzone/Z7RED47DZVVWP",

"HostedZone": {

"Id": "/hostedzone/Z7RED47DZVVWP",

"Name": "example.tld.",

"CallerReference": "example-tld",

"Config": {

"PrivateZone": false

},

"ResourceRecordSetCount": 2

},

"ChangeInfo": {

"Id": "/change/C2IAGSQG1G1LCZ",

"Status": "PENDING",

"SubmittedAt": "2019-03-10T13:10:53.358Z"

},

"DelegationSet": {

"Id": "/delegationset/N3PIG1YNLUZGKS",

"CallerReference": "ns-example-com",

"NameServers": [

"ns-30.awsdns-03.com",

"ns-1037.awsdns-01.org",

"ns-1693.awsdns-19.co.uk",

"ns-673.awsdns-20.net"

]

}

}

Prepare to change name servers; first lower the TTL for the following records:

sudo trimforce enable

Source: Mac OS X 10.10.4 Supports TRIM for Third-Party SSD Hard Drives

I don’t know why the heck PayPal is trying to hide this page so deep from its users. Here’s the URL:

First download virtualenv:

wget -O virtualenv-15.0.3.tar.gz https://github.com/pypa/virtualenv/archive/15.0.3.tar.gz

Extract virtualenv:

tar xvf virtualenv-15.0.3.tar.gz

Create the environment:

python3 virtualenv-15.0.3/virtualenv.py --system-site-packages ~/awscli-ve/

Alternatively, you can use the -p option to specify a version of Python other than the default:

python3 virtualenv-15.0.3/virtualenv.py --system-site-packages -p /usr/bin/python3.4 ~/awscli-ve

Activate your new virtual environment:

source ~/awscli-ve/bin/activate

Install the AWS CLI into your virtual environment:

(awscli-ve)~$ pip install --upgrade awscli

To exit your virtualenv:

deactivate

OneDrive Free Client is “a complete tool to interact with OneDrive on Linux”.

Install the D language compiler:

curl -fsS https://dlang.org/install.sh | bash -s dmd

Run the full source command provided by the output of the above install script.

Download OneDrive Free Client’s source:

git clone https://github.com/skilion/onedrive.git

Change to the source directory:

cd onedrive

Use the following command to edit the prefix defined in Makefile:

sed -i 's|/usr/local|/home/username|g' Makefile

Compile and install OneDrive Free Client:

make && make install

Deactivate the source command:

deactivate

Add ~/bin to your PATH so that you can run binaries in ~/bin without specifying the path. Ignore this step if you have performed it for a different program.

echo "PATH=\$HOME/bin:\$PATH" >> ~/.bashrc && source ~/.bashrc

You can now run OneDrive Free Client with onedrive. To run it in the background, you can use:

screen -dmS onedrive onedrive -m

Run the following command to create a sync list, which lets onedrive sync only specific directories:

vi ~/.config/onedrive/sync_list

In sync_list (one directory per line):

Apps/Datasets-1/

Datasets-Prepare/

If you’re using shadowsocks-libev with simple-obfs and have failover enabled to an actual website (Nginx, Apache, etc.), then your proxy won’t work well with a V2Ray shadowsocks outbound, because extra data sent out from V2Ray will fall back to the shadowsocks failover server you defined.

A simple solution is to disable the failover option on a dedicated shadowsocks server for V2Ray.

bootrec /fixmbr and bootrec /fixboot. Then close the Command Prompt and choose Continue to continue logging in to Windows

diskmgmt to remove the volumes from Ubuntu

You can also use a GUI solution like EasyBCD to remove the Ubuntu boot item from Windows Boot Manager.

https://api.telegram.org/bot<API-access-token>/getUpdates?offset=0

id inside the chat JSON object. In the example below, the chat ID is 123456789.

{

"ok":true,

"result":[

{

"update_id":XXXXXXXXX,

"message":{

"message_id":2,

"from":{

"id":123456789,

"first_name":"Mushroom",

"last_name":"Kap"

},

"chat":{

"id":123456789,

"first_name":"Mushroom",

"last_name":"Kap",

"type":"private"

},

"date":1487183963,

"text":"hi"

}

}

]

}
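The same lookup can be done in Python once you have saved the getUpdates response. This is a minimal sketch: extract_chat_ids is a name made up for illustration, and the sample payload mirrors the response above with a placeholder update_id of 1, since the real value is masked there.

```python
import json


def extract_chat_ids(get_updates_response):
    """Return the distinct chat IDs found in a getUpdates JSON response string."""
    payload = json.loads(get_updates_response)
    if not payload.get("ok"):
        raise ValueError("the response does not report ok: true")
    chat_ids = []
    for update in payload.get("result", []):
        # Updates without a message (or without a chat) are skipped.
        chat = update.get("message", {}).get("chat", {})
        if "id" in chat and chat["id"] not in chat_ids:
            chat_ids.append(chat["id"])
    return chat_ids


SAMPLE_RESPONSE = """
{
  "ok": true,
  "result": [
    {
      "update_id": 1,
      "message": {
        "message_id": 2,
        "from": {"id": 123456789, "first_name": "Mushroom", "last_name": "Kap"},
        "chat": {"id": 123456789, "first_name": "Mushroom", "last_name": "Kap", "type": "private"},
        "date": 1487183963,
        "text": "hi"
      }
    }
  ]
}
"""

if __name__ == "__main__":
    print(extract_chat_ids(SAMPLE_RESPONSE))
```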

If you have jq installed on your system, you can also use the following command:

curl "https://api.telegram.org/bot<API-access-token>/getUpdates?offset=0" | jq -r '.result[].message.chat.id'

You may find the following issue if you run a UniFi setup:

tail -f /srv/unifi/logs/server.log

...

Wed Jun 27 21:52:34.250 [initandlisten] ERROR: mmap private failed with out of memory. You are using a 32-bit build and probably need to upgrade to 64

After googling it, you may find a prune script that Ubiquiti staff posted on their forum.

However, that script can only be executed while MongoDB is running, and no one mentions what to do when you can’t start MongoDB. Here’s the solution; you don’t even need to repair your database in this situation:

Make sure the unifi service is stopped:

systemctl stop unifi

Download the prune script from Ubiquiti support:

wget https://ubnt.zendesk.com/hc/article_attachments/115024095828/mongo_prune_js.js

Start a new SSH session and run MongoDB without --journal; all other parameters are copied from the unifi service:

mongod --dbpath /usr/lib/unifi/data/db --port 27117 --unixSocketPrefix /usr/lib/unifi/run --noprealloc --nohttpinterface --smallfiles --bind_ip 127.0.0.1

Run the prune script:

mongo --port 27117 < mongo_prune_js.js

You should get output similar to this:

MongoDB shell version: 2.4.10

connecting to: 127.0.0.1:27117/test

[dryrun] pruning data older than 7 days (1541581969480)...

switched to db ace

[dryrun] pruning 12404 entries (total 12404) from alarm...

[dryrun] pruning 16036 entries (total 16127) from event...

[dryrun] pruning 76 entries (total 77) from guest...

[dryrun] pruning 24941 entries (total 25070) from rogue...

[dryrun] pruning 365 entries (total 379) from user...

[dryrun] pruning 0 entries (total 10) from voucher...

switched to db ace_stat

[dryrun] pruning 0 entries (total 313) from stat_5minutes...

[dryrun] pruning 21717 entries (total 22058) from stat_archive...

[dryrun] pruning 715 entries (total 736) from stat_daily...

[dryrun] pruning 3655 entries (total 5681) from stat_dpi...

[dryrun] pruning 15583 entries (total 16050) from stat_hourly...

[dryrun] pruning 372 entries (total 382) from stat_life...

[dryrun] pruning 0 entries (total 0) from stat_minute...

[dryrun] pruning 56 entries (total 56) from stat_monthly...

bye

Then edit the prune script to set dryrun=false and rerun it:

MongoDB shell version: 2.4.10

connecting to: 127.0.0.1:27117/test

pruning data older than 7 days (1541582296632)...

switched to db ace

pruning 12404 entries (total 12404) from alarm...

pruning 16036 entries (total 16127) from event...

pruning 76 entries (total 77) from guest...

pruning 24941 entries (total 25070) from rogue...

pruning 365 entries (total 379) from user...

pruning 0 entries (total 10) from voucher...

{ "ok" : 1 }

{ "ok" : 1 }

switched to db ace_stat

pruning 0 entries (total 313) from stat_5minutes...

pruning 21717 entries (total 22058) from stat_archive...

pruning 715 entries (total 736) from stat_daily...

pruning 3655 entries (total 5681) from stat_dpi...

pruning 15583 entries (total 16050) from stat_hourly...

pruning 372 entries (total 382) from stat_life...

pruning 0 entries (total 0) from stat_minute...

pruning 56 entries (total 56) from stat_monthly...

{ "ok" : 1 }

{ "ok" : 1 }

bye

Start the unifi service

systemctl start unifi

The root cause of this issue is that the Cloud Key currently runs a custom 32-bit Debian system on ARMv7, so MongoDB cannot handle data larger than 2 GB. I haven’t tried the Cloud Key 2 and 2 Plus; I hope they’re ARMv8 based. For now, you can limit data retention as a workaround.
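The number in parentheses in the prune output (for example 1541581969480) appears to be the cutoff as a Unix timestamp in milliseconds. Assuming that reading is right, the Python sketch below shows how such a cutoff is derived and how to turn it into a readable date; prune_cutoff_ms and ms_to_utc are illustrative names, not part of the prune script.

```python
from datetime import datetime, timezone

MS_PER_DAY = 24 * 60 * 60 * 1000


def prune_cutoff_ms(now_ms, days=7):
    """Return the timestamp (in ms) before which entries would count as old."""
    return now_ms - days * MS_PER_DAY


def ms_to_utc(ms):
    """Convert a millisecond Unix timestamp to an aware UTC datetime."""
    return datetime.fromtimestamp(ms / 1000, tz=timezone.utc)


if __name__ == "__main__":
    cutoff = 1541581969480  # value printed by the dry run above
    print("cutoff:", ms_to_utc(cutoff).isoformat())
    print("script ran at about:", ms_to_utc(cutoff + 7 * MS_PER_DAY).isoformat())
```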

cd /opt/zammad/app/assets/stylesheets/custom/

vi custom.css

# Editing...

zammad run rake assets:precompile

systemctl restart zammad-web

1339 failing background jobs.

Failed to run background job #1 ‘BackgroundJobSearchIndex’ 10 time(s) with 228 attempt(s).

Run zammad run rails c then:

items = SearchIndexBackend.search('preferences.notification_sound.enabled:*', 3000, 'User')

items.each {|item|

next if !item[:id]

user = User.find_by(id: item[:id])

next if !user

next if !user.preferences

next if !user.preferences[:notification_sound]

next if !user.preferences[:notification_sound][:enabled]

if user.preferences[:notification_sound][:enabled] == 'true'

user.preferences[:notification_sound][:enabled] = true

user.save!

next

end

next if user.preferences[:notification_sound][:enabled] != 'false'

user.preferences[:notification_sound][:enabled] = false

user.save!

next

}

Delayed::Job.all.each {|job|

Delayed::Worker.new.run(job)

}

Further reading: Failing background jobs.;Failed to run background job #1 ‘BackgroundJobSearchIndex’ 10 time(s)

Zammad can actually work very well without Elasticsearch for a small number of tickets. Please note that after disabling Elasticsearch you may see many BackgroundJobSearchIndex errors like this, so use it at your own risk.

zammad run rails r "Setting.set('es_url', '')" # set it empty

zammad run rake searchindex:rebuild

systemctl stop elasticsearch

systemctl disable elasticsearch

systemctl mask elasticsearch

Further reading: Set up Elasticsearch – Zammad Docs

Just add -XX:-AssumeMP to /etc/elasticsearch/jvm.options

This is a “hidden” feature that can only be configured via PowerShell at the moment, and the web admin portal won’t reflect changes you make via PowerShell. That means you can only view aliases for an Office 365 Group with the PowerShell cmdlet below.

First log into your admin account with PowerShell

Set-UnifiedGroup –Identity [email protected] –EmailAddresses @{Add="[email protected]"}

Check if it works

Get-UnifiedGroup –Identity [email protected] | FL EmailAddresses

EmailAddresses : {smtp:[email protected], SPO:SPO_fa5eb50c-147e-4715-b64b-76af8be79767@SPO_f0712c15-1102-49c4-945a-7eda01ce10ff, smtp:[email protected], SMTP:[email protected]}

Connect to Exchange Online PowerShell – Microsoft Docs

Set-ExecutionPolicy RemoteSigned # Windows only

$UserCredential = Get-Credential

$Session = New-PSSession -ConfigurationName Microsoft.Exchange -ConnectionUri https://outlook.office365.com/powershell-liveid/ -Credential $UserCredential -Authentication Basic -AllowRedirection

Import-PSSession $Session -DisableNameChecking

Remove-PSSession $Session # logout

This is a known issue (bug) with USG 4.4.28.* firmware, confirmed on the official beta forum. The error looks like this:

Sep 26 22:38:55 main-router mcad: ace_reporter.process_inform_response(): Failed to get the decrypted data from custom alert response#012

Sep 26 22:38:55 main-router mcad: ace_reporter.reporter_fail(): Decrypt Error (http://192.168.1.10:8080/inform)

Sep 26 22:39:10 main-router mcad: mcagent_data.data_decrypt(): header too small. size=0, should be=40

Sep 26 22:39:10 main-router mcad: ace_reporter.process_inform_response(): Failed to get the decrypted data from custom alert response#012

Sep 26 22:39:10 main-router mcad: ace_reporter.reporter_fail(): Decrypt Error (http://192.168.1.10:8080/inform)

Sep 26 22:54:31 main-router mcad: mcagent_data.data_decrypt(): header too small. size=0, should be=40

Sep 26 22:54:31 main-router mcad: ace_reporter.process_inform_response(): Failed to get the decrypted data from custom alert response#012

Solutions (try them in the following order):

unifi-util patch

Mine got everything back to work after resetting the USG.

Some tips:

You can run info to get some basic information about what’s going on with your USG:

user@main-router:~$ info

Model: UniFi-Gateway-4

Version: 4.4.29.5124212

MAC Address: 78:8a:20:7c:ba:1d

IP Address: 11.22.33.44

Hostname: main-router

Uptime: 192 seconds

Status: Connected (http://unifi:8080/inform)

This guide covers rebuilding a RAID 1 set after a disk failure on a WD My Book Duo on macOS; it should also work on a Thunderbolt Duo or other RAID 1 setups.

Many other guides only tell you how to replace both disks, without restoring or rebuilding data, which does not help in this common situation:

Commonly, a RAID 1 setup fails with only one defective disk while the other stays online. If you see this status in macOS:

External-Raid, rename it to something like External-Raid-Rebuild or any name different from your original. This is the most important step: it makes sure your RAID set is not in use after macOS starts up.

Other notes:

This is not the only way to rebuild your RAID with a failed disk. According to WD documentation, you can power on your My Book Duo without connecting it to macOS (remove the Thunderbolt cable), and the My Book Duo should then rebuild automatically. However, it’s really hard to know when the rebuild process will finish because there’s no special indicator status for this situation, so I prefer rebuilding in macOS. This could be the best method for me.

sudo vi /etc/services

Search 5900 and edit it.

sudo launchctl unload /System/Library/LaunchDaemons/com.apple.screensharing.plist

sudo launchctl load /System/Library/LaunchDaemons/com.apple.screensharing.plist

It was available at http://www.apple.com/business/docs/Autofs.pdf

$ youtube-dl https://youtu.be/LB_X_GgNXMM -F

Output:

youtube: LB_X_GgNXMM: Downloading webpage

youtube: LB_X_GgNXMM: Downloading video info webpage

[info] Available formats for LB_X_GgNXMM:

format code extension resolution note

249 webm audio only DASH audio 56k , opus @ 50k, 201.84KiB

250 webm audio only DASH audio 71k , opus @ 70k, 267.21KiB

140 m4a audio only DASH audio 127k , m4a_dash container, mp4a.40.2@128k, 547.96KiB

171 webm audio only DASH audio 131k , vorbis@128k, 547.52KiB

251 webm audio only DASH audio 135k , opus @160k, 534.35KiB

160 mp4 256x144 144p 69k , avc1.4d400c, 24fps, video only, 221.40KiB

278 webm 256x144 144p 95k , webm container, vp9, 24fps, video only, 387.03KiB

242 webm 426x240 240p 186k , vp9, 24fps, video only, 623.64KiB

133 mp4 426x240 240p 213k , avc1.4d4015, 24fps, video only, 618.98KiB

243 webm 640x360 360p 416k , vp9, 24fps, video only, 1.29MiB

134 mp4 640x360 360p 440k , avc1.4d401e, 24fps, video only, 1.26MiB

135 mp4 854x480 480p 714k , avc1.4d401e, 24fps, video only, 2.08MiB

244 webm 854x480 480p 724k , vp9, 24fps, video only, 2.22MiB

136 mp4 1280x720 720p 1001k , avc1.4d401f, 24fps, video only, 3.06MiB

247 webm 1280x720 720p 1122k , vp9, 24fps, video only, 3.39MiB

137 mp4 1920x1080 1080p 1679k , avc1.640028, 24fps, video only, 4.86MiB

248 webm 1920x1080 1080p 1998k , vp9, 24fps, video only, 5.13MiB

17 3gp 176x144 small , mp4v.20.3, mp4a.40.2@ 24k, 348.19KiB

36 3gp 320x180 small , mp4v.20.3, mp4a.40.2, 962.59KiB

18 mp4 640x360 medium , avc1.42001E, mp4a.40.2@ 96k, 2.11MiB

43 webm 640x360 medium , vp8.0, vorbis@128k, 3.46MiB

22 mp4 1280x720 hd720 , avc1.64001F, mp4a.40.2@192k (best)

$ youtube-dl https://youtu.be/LB_X_GgNXMM -f 137+140
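The 137+140 selector asks youtube-dl to merge one video-only format with one audio-only format. One plausible way to arrive at that pair is to take the highest-bitrate mp4 video and the highest-bitrate m4a audio from the listing. The Python sketch below illustrates that reasoning on a hand-copied subset of the table; the data structure and the pick_pair helper are assumptions for illustration and do not call youtube-dl.

```python
# A few rows copied by hand from the -F listing above.
FORMATS = [
    {"code": "140", "ext": "m4a", "kind": "audio", "kbps": 127},
    {"code": "251", "ext": "webm", "kind": "audio", "kbps": 135},
    {"code": "136", "ext": "mp4", "kind": "video", "kbps": 1001},
    {"code": "137", "ext": "mp4", "kind": "video", "kbps": 1679},
    {"code": "248", "ext": "webm", "kind": "video", "kbps": 1998},
]


def pick_pair(formats, video_ext="mp4", audio_ext="m4a"):
    """Return a 'video+audio' selector using the highest-bitrate match for each extension."""
    def best(kind, ext):
        candidates = [f for f in formats if f["kind"] == kind and f["ext"] == ext]
        if not candidates:
            raise ValueError("no {} format with extension {}".format(kind, ext))
        return max(candidates, key=lambda f: f["kbps"])

    return "{}+{}".format(best("video", video_ext)["code"], best("audio", audio_ext)["code"])


if __name__ == "__main__":
    print(pick_pair(FORMATS))
```

Restricting both streams to the mp4 family is a design choice in this sketch; picking webm for both would give a different pair.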

Source: EdgeRouter – L2TP IPsec VPN Server

Applicable to the latest EdgeOS firmware on all EdgeRouter models using CLI mode. L2TP setup is not configurable in the web interface.

configure

set vpn l2tp remote-access ipsec-settings authentication mode pre-shared-secret

set vpn l2tp remote-access ipsec-settings authentication pre-shared-secret <secret>

set vpn l2tp remote-access authentication mode local

set vpn l2tp remote-access authentication local-users username <username> password <secret>

set vpn l2tp remote-access client-ip-pool start 192.168.100.240

set vpn l2tp remote-access client-ip-pool stop 192.168.100.249

set vpn l2tp remote-access dns-servers server-1 <ip-address>

set vpn l2tp remote-access dns-servers server-2 <ip-address>

Configure only one of the following statements. Decide on which command is best for your situation using these options:

set vpn l2tp remote-access dhcp-interface eth0

set vpn l2tp remote-access outside-address 203.0.113.1

set vpn l2tp remote-access outside-address 0.0.0.0

set vpn ipsec ipsec-interfaces interface eth0

set vpn l2tp remote-access mtu <mtu-value>

commit ; save

You can verify the VPN settings using the following commands from operational mode:

show firewall name WAN_LOCAL statistics

show vpn remote-access

show vpn ipsec sa

show interfaces

show log | match 'xl2tpd|pppd'

UPDATE `wp_users` SET `user_pass`= MD5('yourpassword') WHERE `user_login`='yourusername';
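If you would rather not send the plain-text password to the database, you can compute the same MD5 hex digest locally and put the result in the UPDATE statement instead of the MD5('yourpassword') call. A minimal Python sketch, where md5_hex is just an illustrative helper name:

```python
import hashlib


def md5_hex(password):
    """Return the hex MD5 digest of the password, matching what MySQL's MD5() returns for the same bytes."""
    return hashlib.md5(password.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    print(md5_hex("yourpassword"))
```

As far as I know, WordPress accepts such a legacy MD5 value and replaces it with a stronger hash the next time the user logs in, so treat this as a recovery step rather than a way to store passwords.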

You can try this method if you are in one of the following situations:

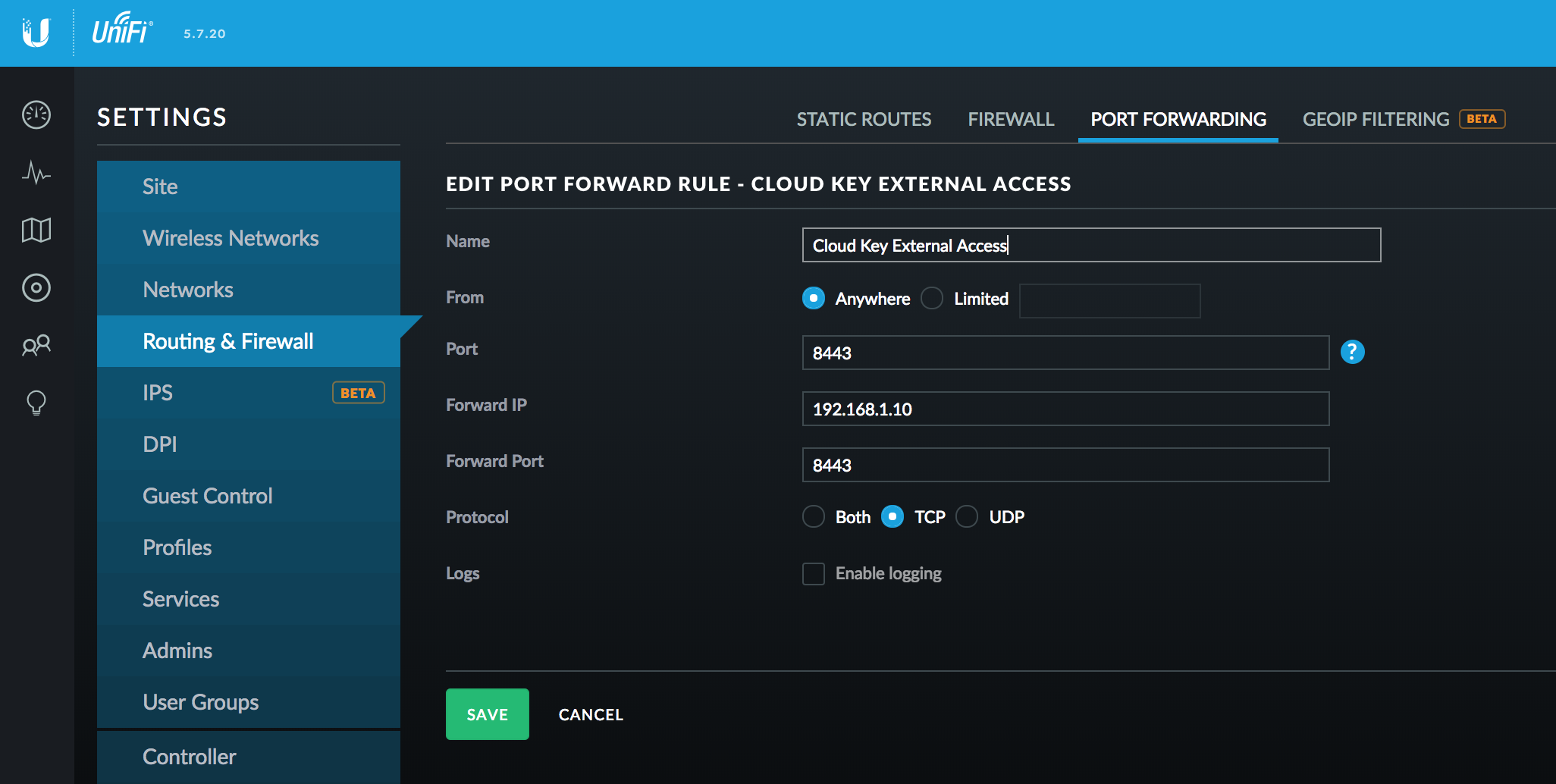

In general, you can access your UniFi Security Gateway (USG) via your public IP (USG_IP), so in my method you need to forward your UCK management dashboard traffic (UCK_IP:8443 by default) to your public IP. The setting is under Settings – Routing & Firewall – Port Forwarding. Enter your Cloud Key IP address as Forward IP, and use the default 8443 as Port and Forward Port. As a security best practice, you can also limit From to your server IP.

Use the following Nginx configuration; please note that this is a simplified version.

server {

listen 80;

listen [::]:80;

server_name unifi.example.com;

return 301 https://$server_name$request_uri;

}

server {

listen 443 ssl http2;

listen [::]:443 ssl http2;

# To avoid unreachable port error when launching dashboard from unifi.ubnt.com

listen 8443 ssl http2;

listen [::]:8443 ssl http2;

server_name unifi.example.com;

# Certificate

ssl_certificate /etc/nginx/ssl/unifi.example.com.crt;

ssl_certificate_key /etc/nginx/ssl/unifi.example.com.key;

location /wss {

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "Upgrade";

proxy_set_header CLIENT_IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_read_timeout 86400;

proxy_pass https://USG_IP:8443;

}

location / {

proxy_set_header Host $http_host;

proxy_set_header CLIENT_IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_read_timeout 180;

proxy_pass https://USG_IP:8443;

}

}

Point your unifi.example.com to your public IP. Access it in your browser and everything should now work!

This article describes how to access the emergency recovery UI and recover a Cloud Key. From this UI you can reset it to factory defaults, reboot, shutoff and upgrade the firmware. To upgrade the firmware you will need a firmware binary for the UniFi Cloud Key.

Source: UniFi – Cloud Key Emergency Recovery UI – Ubiquiti Networks Support and Help Center

Install it on macOS:

.p7b) or individual certs (zipped) from DigiCert

Install it on iOS:

.p12 (Make sure to export your cert into .p12; this will contain the private key for iOS to send encrypted emails)

Temporary solution:

$ ln -sf /usr/lib64/libmbedcrypto.so.2.7.0 /usr/lib64/libmbedcrypto.so.0

The AWS CLI now supports the --query parameter, which takes a JMESPath expression.

This means you can sum the size values given by list-objects using sum(Contents[].Size) and count them with length(Contents[]).

This can be run using the official AWS CLI as below; the feature was introduced in Feb 2014.

aws s3api list-objects --bucket BUCKETNAME --output json --query "[sum(Contents[].Size), length(Contents[])]"
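To make clear what the JMESPath expression computes, here is the same calculation in plain Python on a small hand-written response. The sample keys and sizes are invented for illustration, and the sketch does not call AWS at all.

```python
def bucket_size_and_count(list_objects_response):
    """Mirror [sum(Contents[].Size), length(Contents[])] for a list-objects style dict."""
    contents = list_objects_response.get("Contents", [])
    total_bytes = sum(obj["Size"] for obj in contents)
    return total_bytes, len(contents)


# Invented sample data with the same shape as the list-objects JSON output.
SAMPLE_RESPONSE = {
    "Contents": [
        {"Key": "a.txt", "Size": 1024},
        {"Key": "b.bin", "Size": 2048},
        {"Key": "c.log", "Size": 512},
    ]
}

if __name__ == "__main__":
    total_bytes, object_count = bucket_size_and_count(SAMPLE_RESPONSE)
    print(total_bytes, object_count)
```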

Source: How can I get the size of an Amazon S3 bucket? – Server Fault

cd /tmp/

wget http://update.aegis.aliyun.com/download/uninstall.sh

chmod +x uninstall.sh

./uninstall.sh

wget http://update.aegis.aliyun.com/download/quartz_uninstall.sh

chmod +x quartz_uninstall.sh

./quartz_uninstall.sh

Remove leftovers

pkill aliyun-service

systemctl disable aliyun

rm -fr /etc/init.d/agentwatch /usr/sbin/aliyun-service

rm -rf /usr/local/aegis

rm /usr/sbin/aliyun-service

rm /usr/sbin/aliyun-service.backup

rm /usr/sbin/aliyun_installer

rm /etc/systemd/system/aliyun.service

rm /lib/systemd/system/aliyun.service

Block aliyundun IPs (IP update list from Aliyun)

iptables -I INPUT -s 140.205.201.0/28 -j DROP

iptables -I INPUT -s 140.205.201.16/29 -j DROP

iptables -I INPUT -s 140.205.201.32/28 -j DROP

iptables -I INPUT -s 140.205.225.192/29 -j DROP

iptables -I INPUT -s 140.205.225.200/30 -j DROP

iptables -I INPUT -s 140.205.225.184/29 -j DROP

iptables -I INPUT -s 140.205.225.183/32 -j DROP

iptables -I INPUT -s 140.205.225.206/32 -j DROP

iptables -I INPUT -s 140.205.225.205/32 -j DROP

iptables -I INPUT -s 140.205.225.195/32 -j DROP

iptables -I INPUT -s 140.205.225.204/32 -j DROP

iptables -I INPUT -s 106.11.224.0/26 -j DROP

iptables -I INPUT -s 106.11.224.64/26 -j DROP

iptables -I INPUT -s 106.11.224.128/26 -j DROP

iptables -I INPUT -s 106.11.224.192/26 -j DROP

iptables -I INPUT -s 106.11.222.64/26 -j DROP

iptables -I INPUT -s 106.11.222.128/26 -j DROP

iptables -I INPUT -s 106.11.222.192/26 -j DROP

iptables -I INPUT -s 106.11.223.0/26 -j DROP

firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='140.205.201.0/28' reject"

firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='140.205.201.16/29' reject"

firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='140.205.201.32/28' reject"

firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='140.205.225.192/29' reject"

firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='140.205.225.200/30' reject"

firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='140.205.225.184/29' reject"

firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='140.205.225.183/32' reject"

firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='140.205.225.206/32' reject"

firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='140.205.225.205/32' reject"

firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='140.205.225.195/32' reject"

firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='140.205.225.204/32' reject"

firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='106.11.224.0/26' reject"

firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='106.11.224.64/26' reject"

firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='106.11.224.128/26' reject"

firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='106.11.224.192/26' reject"

firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='106.11.222.64/26' reject"

firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='106.11.222.128/26' reject"

firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='106.11.222.192/26' reject"

firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='106.11.223.0/26' reject"
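Several of the ranges above are adjacent or contained in one another, so the rule list can be shortened. The Python sketch below uses the standard ipaddress module to merge them and print the equivalent iptables commands; collapse_ranges and iptables_rules are illustrative helper names, and the merged list is an optional simplification, not something Aliyun publishes.

```python
import ipaddress

# The same ranges as in the iptables and firewall-cmd rules above.
BLOCKED_RANGES = [
    "140.205.201.0/28", "140.205.201.16/29", "140.205.201.32/28",
    "140.205.225.192/29", "140.205.225.200/30", "140.205.225.184/29",
    "140.205.225.183/32", "140.205.225.206/32", "140.205.225.205/32",
    "140.205.225.195/32", "140.205.225.204/32",
    "106.11.224.0/26", "106.11.224.64/26", "106.11.224.128/26", "106.11.224.192/26",
    "106.11.222.64/26", "106.11.222.128/26", "106.11.222.192/26", "106.11.223.0/26",
]


def collapse_ranges(cidrs):
    """Merge adjacent networks and drop networks already covered by a larger one."""
    networks = [ipaddress.ip_network(cidr) for cidr in cidrs]
    return [str(net) for net in ipaddress.collapse_addresses(networks)]


def iptables_rules(cidrs):
    """Render one DROP rule per range, in the same form as the commands above."""
    return ["iptables -I INPUT -s {} -j DROP".format(cidr) for cidr in cidrs]


if __name__ == "__main__":
    for rule in iptables_rules(collapse_ranges(BLOCKED_RANGES)):
        print(rule)
```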

Nginx:

client_max_body_size

PHP:

post_max_size

upload_max_filesize

List files under pdf:

$ s3cmd ls --requester-pays s3://arxiv/pdf

DIR s3://arxiv/pdf/

List files under pdf:

$ s3cmd ls --requester-pays s3://arxiv/pdf/\*

2010-07-29 19:56 526202880 s3://arxiv/pdf/arXiv_pdf_0001_001.tar

2010-07-29 20:08 138854400 s3://arxiv/pdf/arXiv_pdf_0001_002.tar

2010-07-29 20:14 525742080 s3://arxiv/pdf/arXiv_pdf_0002_001.tar

2010-07-29 20:33 156743680 s3://arxiv/pdf/arXiv_pdf_0002_002.tar

2010-07-29 20:38 525731840 s3://arxiv/pdf/arXiv_pdf_0003_001.tar

2010-07-29 20:52 187607040 s3://arxiv/pdf/arXiv_pdf_0003_002.tar

2010-07-29 20:58 525731840 s3://arxiv/pdf/arXiv_pdf_0004_001.tar

2010-07-29 21:11 44851200 s3://arxiv/pdf/arXiv_pdf_0004_002.tar

2010-07-29 21:14 526305280 s3://arxiv/pdf/arXiv_pdf_0005_001.tar

2010-07-29 21:27 234711040 s3://arxiv/pdf/arXiv_pdf_0005_002.tar

...

Get all files under pdf:

$ s3cmd get --requester-pays s3://arxiv/pdf/\*

List all content to text file:

$ s3cmd ls --requester-pays s3://arxiv/src/\* > all_files.txt

Calculate file size:

$ awk '{s += $3} END { print "sum is", s/1000000000, "GB, average is", s/NR }' all_files.txt

sum is 844.626 GB, average is 4.80447e+08
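Note that the one-liner counts every input line in NR, so a DIR line like the one in the first listing would lower the average. Below is a small sketch that only counts lines whose third field is a number. The function name total_bytes is made up for illustration, and the sample input is copied from the listing above.

```shell
#!/bin/sh
# Sum the size column (3rd field) of `s3cmd ls` output read from stdin.
# Only lines whose 3rd field is purely numeric are counted, which skips DIR lines.
total_bytes() {
    awk '$3 ~ /^[0-9]+$/ { s += $3; n++ } END { printf "%.0f bytes in %d files\n", s, n }'
}

# Sample input taken from the listing above.
total_bytes <<'EOF'
                       DIR   s3://arxiv/pdf/
2010-07-29 19:56 526202880   s3://arxiv/pdf/arXiv_pdf_0001_001.tar
2010-07-29 20:08 138854400   s3://arxiv/pdf/arXiv_pdf_0001_002.tar
EOF
```

On the real data it would be run as: total_bytes < all_files.txt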

Install dependencies:

$ yum install cvs git gcc automake autoconf libtool -y

Download Tsunami UDP:

$ cd /tmp

$ cvs -z3 -d:pserver:[email protected]:/cvsroot/tsunami-udp co -P tsunami-udp

$ cd tsunami-udp

$ ./recompile.sh

$ make install

Then on the server side:

$ tsunamid --port 46224 * # (Serves all files from current directory for copy)

On the client side:

$ tsunami connect <server_ip> get *

Transfer dataset back to S3:

aws s3 cp --recursive /mnt/bigephemeral s3://<your-new-bucket>/

This answer comes by way of piecing together bits from other answers, so to the previous contributors: thank you, because I would not have figured this out otherwise.

This example is based on the RHEL 7 AMI (Amazon Machine Image) 3.10.0-229.el7.x86_64.

As mentioned above, the optional repository is not enabled by default. Don’t add another repo.d file; one already exists, it is just disabled.

To enable it, you first need its name. I used grep to find it:

grep -B1 -i optional /etc/yum.repos.d/*

Above each name is the repo id enclosed in [ ]. Look for the optional repo, not optional-source.

Enable the optional repo (append the repo id you found above):

yum-config-manager --enable

Refresh the yum cache (not sure if this is necessary, but it doesn’t hurt):

sudo yum makecache

Finally, you can install ruby-devel:

yum install ruby-devel

Depending on your user’s permissions you may need to use sudo.

$ some_cmd > some_cmd.log 2>&1 &
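The order of the redirections matters in this command: the first redirection points stdout at the log file, and 2>&1 then sends stderr to wherever stdout points at that moment. The trailing & runs the job in the background. Here is a small sketch using a made-up function noisy in place of some_cmd and a temporary file in place of the log:

```shell
#!/bin/sh
# `noisy` is a stand-in for some_cmd: it writes one line to each stream.
noisy() {
    echo "to stdout"
    echo "to stderr" >&2
}

log=$(mktemp)

# Same pattern as above: both streams go to the log, the job runs in the background.
noisy > "$log" 2>&1 &
wait    # wait for the background job so the log is complete before reading it

cat "$log"
```

Writing the redirections the other way round (2>&1 before the file redirection) would leave stderr on the terminal, because at that point stdout has not been redirected yet.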

Source: https://unix.stackexchange.com/a/106641

Nowadays it’s really simple to install Transmission on CentOS 7 or another EL distro. First you need to enable the EPEL repository:

$ yum install epel-release

$ yum -y update

…or use the following command if you’re running Red Hat Enterprise Linux:

$ yum install https://dl.fedoraproject.org/pub/epel/epel-release-latest-$(rpm -E '%{rhel}').noarch.rpm

Install Transmission:

$ yum install transmission-cli transmission-common transmission-daemon

Create storage directory:

$ mkdir /ebs-data/transmission/

$ chown -R transmission:transmission /ebs-data/transmission/

Start and stop the Transmission daemon once so that it auto-generates its config files:

$ systemctl start transmission-daemon.service

$ systemctl stop transmission-daemon.service

To edit the config, you MUST first stop the daemon, otherwise the config will be overwritten after you restart the daemon:

$ systemctl stop transmission-daemon.service

$ vi /var/lib/transmission/.config/transmission-daemon/settings.json

Edit config:

"download-dir": "/ebs-data/transmission",

"incomplete-dir": "/ebs-data/transmission",

"rpc-authentication-required": true,

"rpc-enabled": true,

"rpc-password": "my_password",

"rpc-username": "my_user",

"rpc-whitelist": "0.0.0.0",

"rpc-whitelist-enabled": false,

Save and start daemon:

$ systemctl start transmission-daemon.service

Access via your browser:

$ open http://localhost:9091/transmission/web/

View all available volumes:

$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

xvda 202:0 0 10G 0 disk

├─xvda1 202:1 0 1M 0 part

└─xvda2 202:2 0 10G 0 part /

xvdf 202:80 0 3.9T 0 disk

$ file -s /dev/xvdf

/dev/xvdf: data

If it returns data, the volume has no file system on it yet, so we need to format it first:

$ mkfs -t ext4 /dev/xvdf

mke2fs 1.42.9 (28-Dec-2013)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=0 blocks, Stripe width=0 blocks

262144000 inodes, 1048576000 blocks

52428800 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=3196059648

32000 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000, 7962624, 11239424, 20480000, 23887872, 71663616, 78675968,

102400000, 214990848, 512000000, 550731776, 644972544

Allocating group tables: done

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done
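Since mkfs overwrites whatever is on the device, it can be worth scripting the file -s check from above so that formatting only happens on a blank volume. Here is a minimal sketch; the helper name is_blank_device is made up for illustration, and it only inspects the text that file -s printed.

```shell
#!/bin/sh
# Hypothetical helper: decide from `file -s` output whether a device is blank.
# `file -s` prints "<device>: data" when it finds no recognizable signature.
is_blank_device() {
    case "$1" in
        *": data") return 0 ;;
        *)         return 1 ;;
    esac
}

# On the real machine (as root; /dev/xvdf is the device from the listing above):
#   if is_blank_device "$(file -s /dev/xvdf)"; then mkfs -t ext4 /dev/xvdf; fi
is_blank_device "/dev/xvdf: data" && echo "blank: safe to format"
```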

Create a new directory and mount the EBS volume on it:

$ cd / && mkdir ebs-data

$ mount /dev/xvdf /ebs-data/

Check volume mount:

$ df -h

Filesystem Size Used Avail Use% Mounted on

/dev/xvda2 10G 878M 9.2G 9% /

devtmpfs 476M 0 476M 0% /dev

tmpfs 496M 0 496M 0% /dev/shm

tmpfs 496M 13M 483M 3% /run

tmpfs 496M 0 496M 0% /sys/fs/cgroup

tmpfs 100M 0 100M 0% /run/user/1000

tmpfs 100M 0 100M 0% /run/user/0

/dev/xvdf 3.9T 89M 3.7T 1% /ebs-data

To make the volume mount automatically after each reboot, we need to edit /etc/fstab. First make a backup:

$ cp /etc/fstab /etc/fstab.orig

Find the UUID for the volume you need to mount:

$ ls -al /dev/disk/by-uuid/

total 0

drwxr-xr-x. 2 root root 80 Nov 25 05:04 .

drwxr-xr-x. 4 root root 80 Nov 25 04:40 ..

lrwxrwxrwx. 1 root root 11 Nov 25 04:40 de4dfe96-23df-4bb9-ad5e-08472e7d1866 -> ../../xvda2

lrwxrwxrwx. 1 root root 10 Nov 25 05:04 e54af798-14df-419d-aeb7-bd1b4d583886 -> ../../xvdf

Then edit /etc/fstab:

$ vi /etc/fstab

with:

#

# /etc/fstab

# Created by anaconda on Tue Jul 11 15:57:39 2017

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

UUID=de4dfe96-23df-4bb9-ad5e-08472e7d1866 / xfs defaults 0 0

UUID=e54af798-14df-419d-aeb7-bd1b4d583886 /ebs-data ext4 defaults,nofail 0 2

Check that fstab has no errors (if mount -a fails here, fix the file before rebooting):

$ mount -a
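The new fstab line can also be generated instead of typed by hand, which avoids copy mistakes in the UUID. Here is a minimal sketch; the helper name fstab_entry is made up for illustration, and it reuses the options of the /ebs-data entry above (defaults,nofail 0 2).

```shell
#!/bin/sh
# Hypothetical helper: print an fstab line for a UUID, mount point and fs type,
# with the same options as the /ebs-data entry above.
fstab_entry() {
    printf 'UUID=%s %s %s defaults,nofail 0 2\n' "$1" "$2" "$3"
}

# On the real machine the UUID could be read with: blkid -s UUID -o value /dev/xvdf
fstab_entry e54af798-14df-419d-aeb7-bd1b4d583886 /ebs-data ext4
```

The printed line can be compared with the entry above before it is appended to /etc/fstab.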

curl -v -s -o - -X OPTIONS https://www.google.com/

If you’re running the 64-bit edition of Windows 10 Pro or Enterprise, you can set up a virtual machine using the built-in Hyper-V technology. Here we take a look at how.